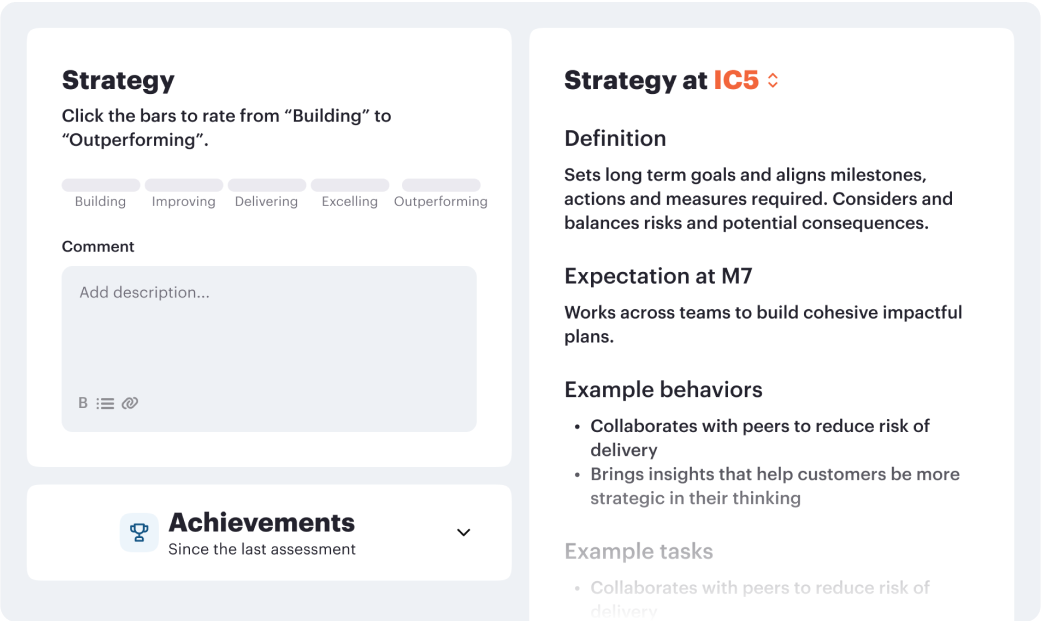

How meetings can drive structured, performance-based check-ins to keep employee performance on track.

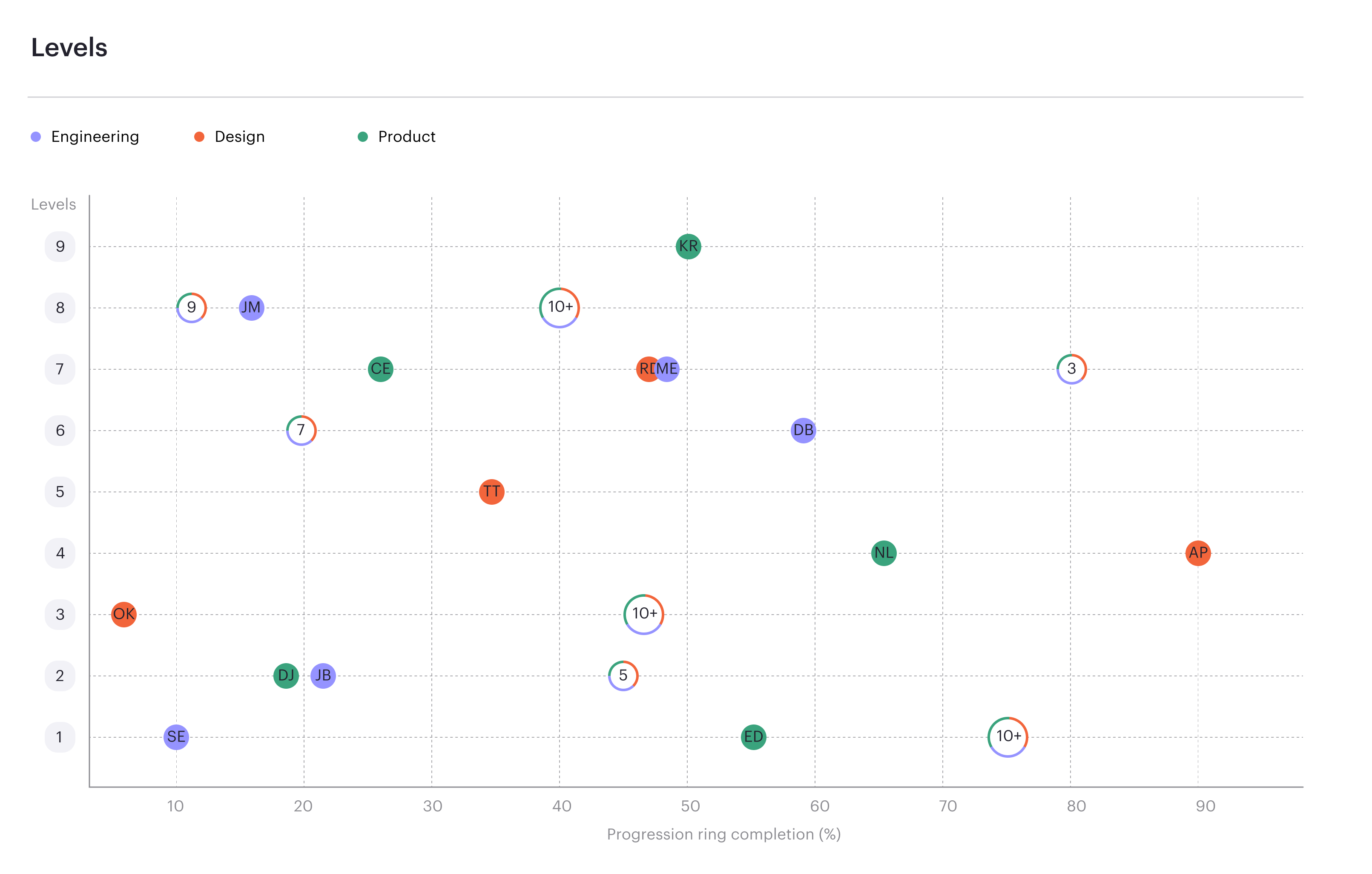

Continuous progression drives performance.

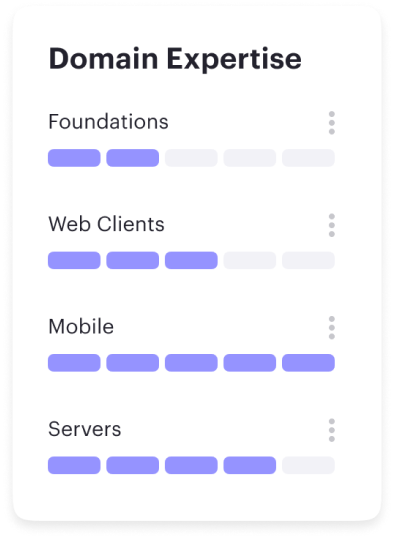

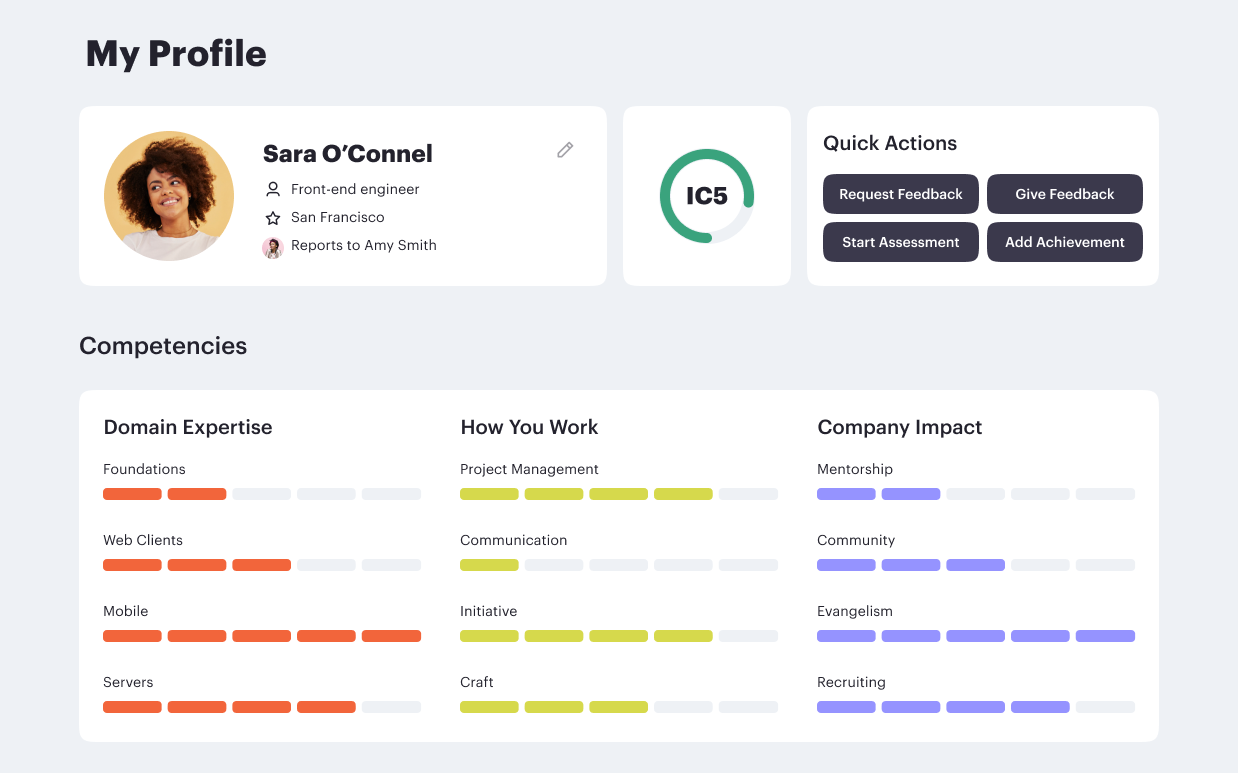

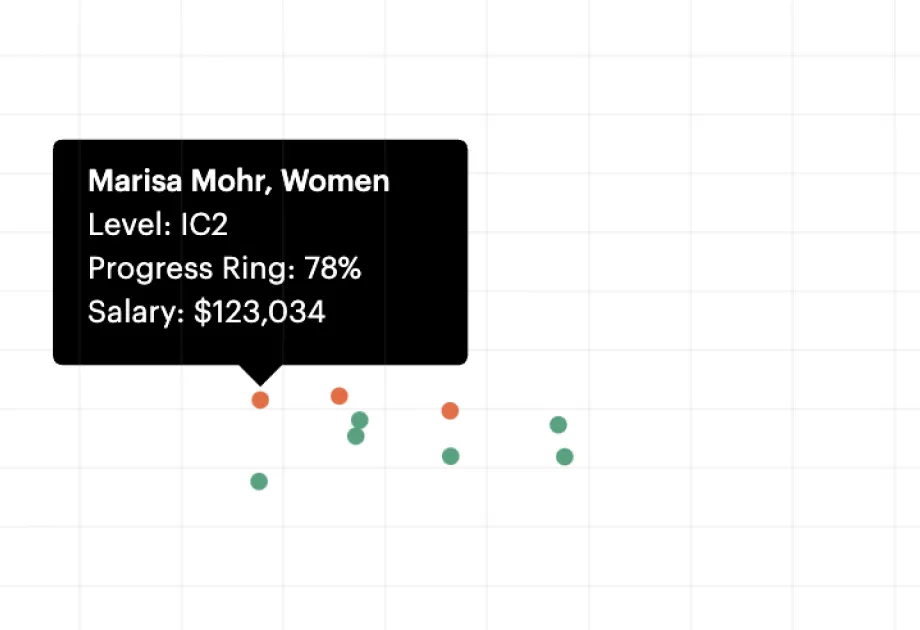

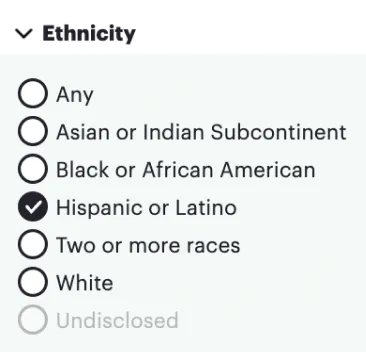

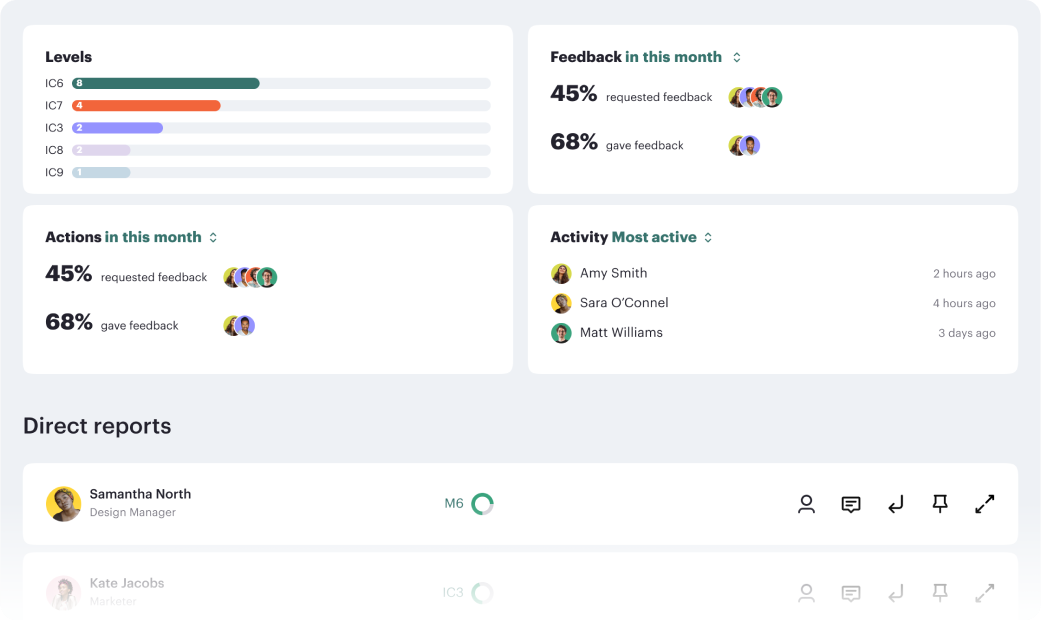

Career frameworks, feedback and goals to structure, measure and accelerate business impact.
